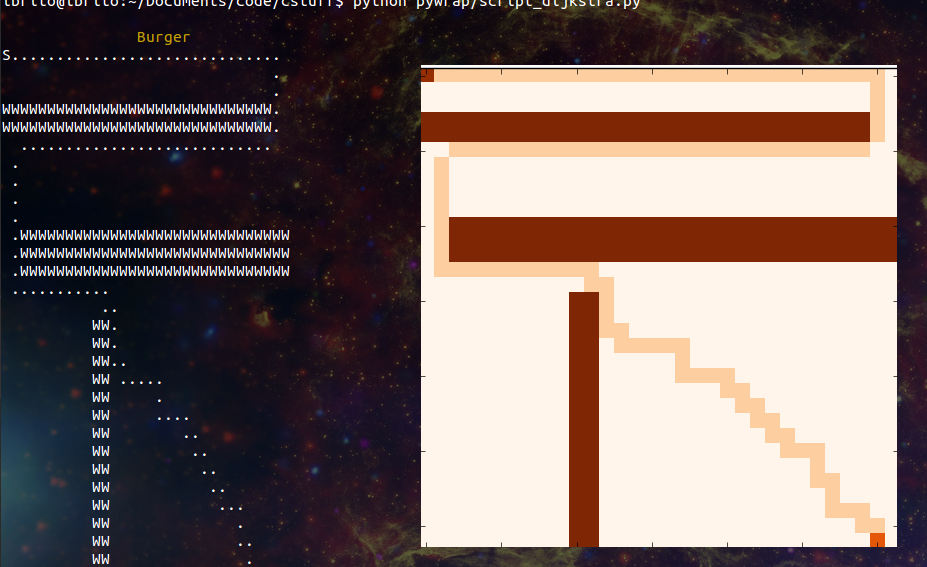

Figure 1

How do old-time languages such as C, Fortran and others survive in a world with Python, Ruby and so on?

There is plenty of legacy code still around that needs maintaining, of course. And there are (will always be?) a few specific applications where low-level code is needed. But one of the great things about software is building on old stuff with new tools, which brings us to our topic today: building a shared library containing some of our C stuff and using it from nice and comfy Python. Figure 1 shows an example of what we can achieve by using the graphical tools available in Python to improve our existing code’s text-based output. More on that later.

For our purposes, we treat a shared library as a collection of compiled objects condensed into a single file, which other software can then load and call. This is, of course, a simplification. A longer discussion of shared and static libraries can be found in [1].
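To make "called by other software" a bit more concrete, here is a minimal sketch of Python calling a function from a shared library that already exists on most systems, the C math library. It assumes a Unix-like system where `ctypes.util.find_library("m")` can locate that library. The choice of `cos` is purely illustrative and is not the code this article builds.

```python
import ctypes
import ctypes.util

# Locate the C math library. The short name "m" is an assumption
# that holds on typical Unix-like systems (Linux, macOS).
libm_path = ctypes.util.find_library("m")
libm = ctypes.CDLL(libm_path)

# Describe the C signature so ctypes converts values correctly:
#   double cos(double x);
libm.cos.argtypes = [ctypes.c_double]
libm.cos.restype = ctypes.c_double

# Call the compiled C function as if it were a Python function.
result = libm.cos(0.0)
print(result)
```

The same pattern (load the file, declare argument and return types, call) should carry over to a library we compile ourselves.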