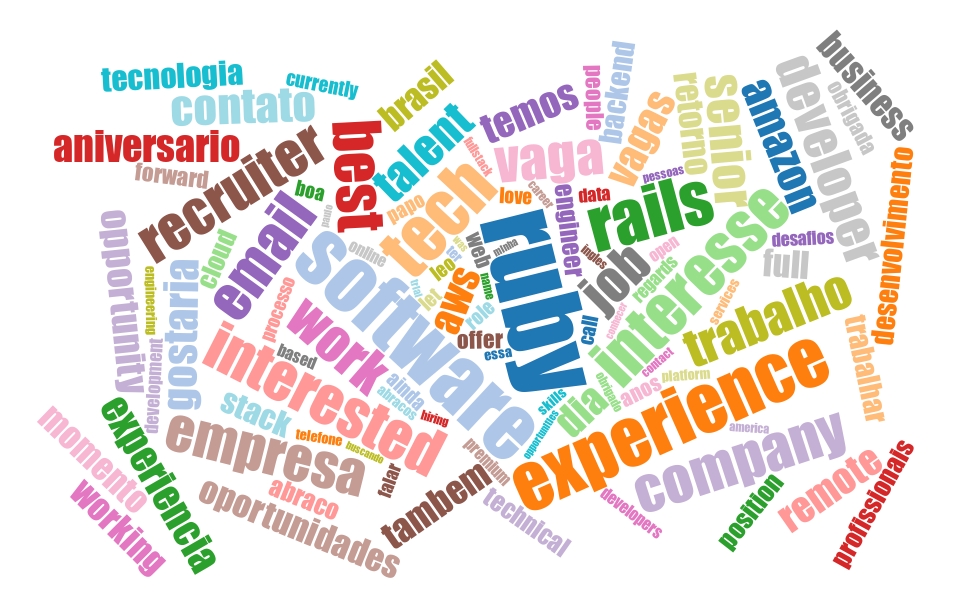

I’ve been using LinkedIn basically since I started working as an intern back in 2012. My usage is mostly limited to sharing my blog posts, except for the couple of times I used the platform to search for a new job. So most of the time, LinkedIn has been pretty slow-paced, with maybe half a dozen random recruiters reaching out per year.

However, since the Covid-19 pandemic started, and particularly in 2021, things seem to have gone a little crazy, with a lot more recruiter activity. I was curious to see just how much things had changed, so I looked at LinkedIn’s data export.